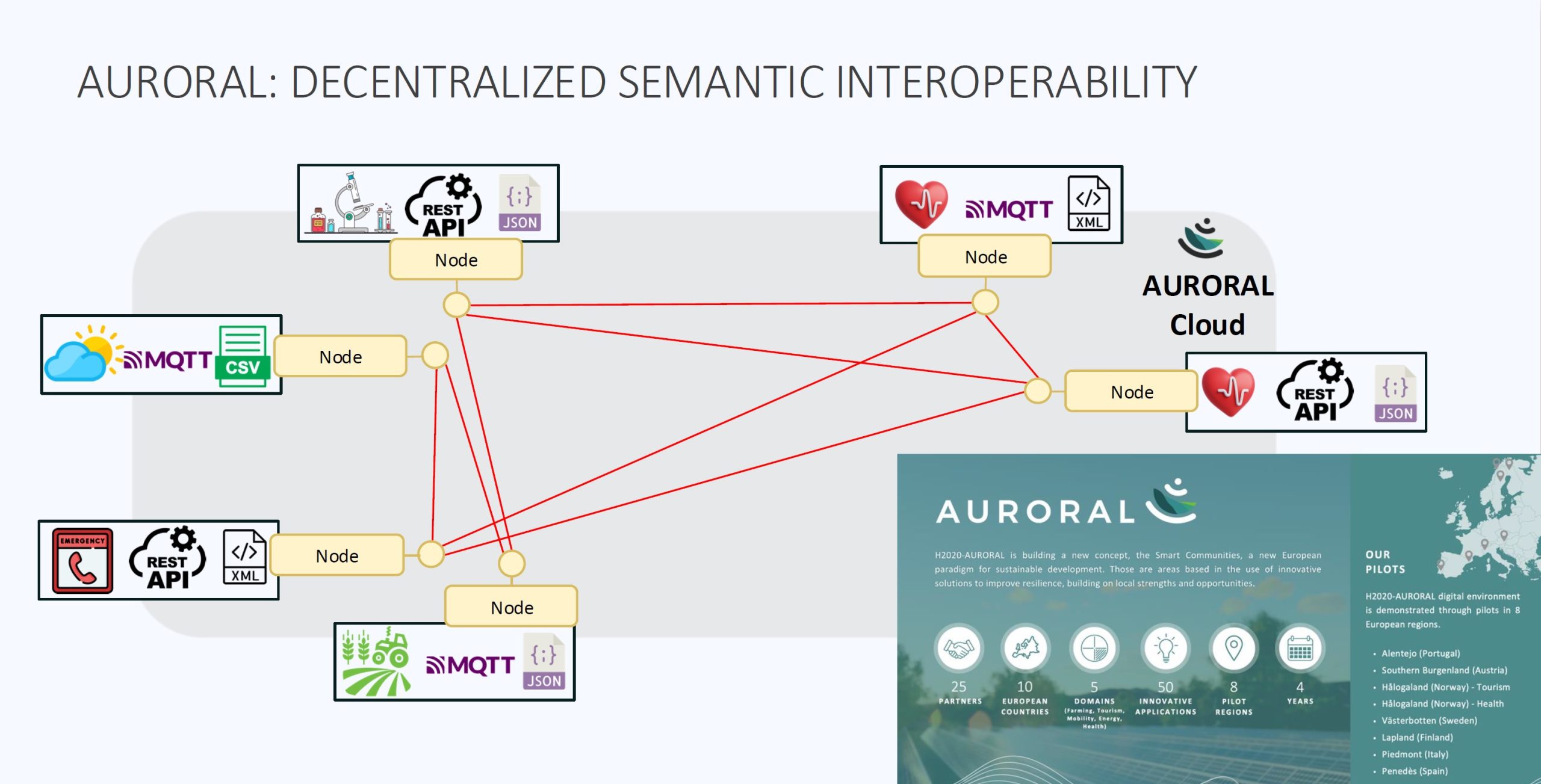

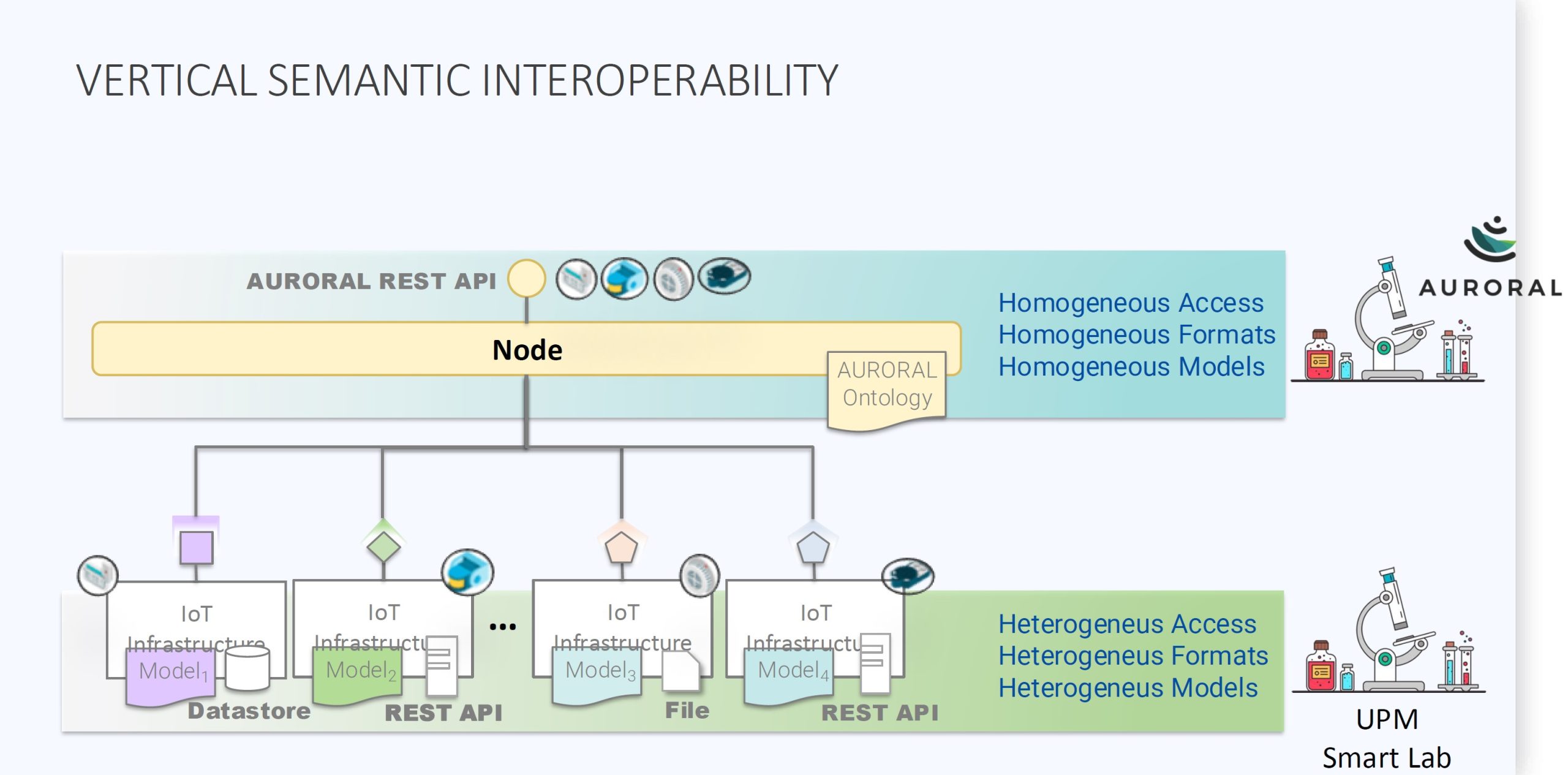

The AURORAL H2020 project aims to enhance semantic interoperability in rural areas, ensuring consistent data understanding and usage among heterogeneous actors and systems.

It enables interoperability between decentralised nodes that follow a set of shared, standards-based ontologies and take care of data normalization, discovery, and validation, as well as enforcing user-defined privacy policies over the data.

Semantic Interoperability in AURORAL

Semantic interoperability is the ability of computer systems to exchange data with unambiguous, shared meaning. Ontologies play a key role in representing that shared meaning explicitly. Other kinds of data models typically focus only on the syntactic representation, leaving the semantics implicit or defined externally. Without clearly understood semantics, data cannot be reliably reused. The result is a fragmented landscape of data silos that impedes the development of cross-application and cross-domain data infrastructures. Semantic interoperability is therefore critical for open markets of services and data.
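The minimal Python sketch below illustrates the contrast between syntactic and semantic representations. It does not show AURORAL's actual mechanism. Two hypothetical farm systems publish the same measurements under different field names and units. Each system declares a mapping from its local keys to terms of a shared ontology. The `example.org` IRIs, the mapping tables, and the `to_shared` helper are all illustrative assumptions.

```python
"""Sketch: two systems share meaning by mapping local field names to terms
of a shared ontology. All IRIs and mappings are hypothetical."""

from dataclasses import dataclass

# Hypothetical shared vocabulary: one IRI and one canonical unit per concept.
ONTO = "https://example.org/shared-onto#"
AIR_TEMPERATURE = ONTO + "airTemperature"  # canonical unit: degree Celsius
SOIL_MOISTURE = ONTO + "soilMoisture"      # canonical unit: percent


@dataclass(frozen=True)
class Observation:
    property_iri: str  # what was measured, as a shared-ontology IRI
    value: float       # value expressed in the property's canonical unit


# Each producer publishes a mapping from its local (syntactic) keys to the
# shared terms, plus a converter into the canonical unit.
FARM_A_MAPPING = {
    "temp_c": (AIR_TEMPERATURE, lambda v: v),
    "soil_pct": (SOIL_MOISTURE, lambda v: v),
}
FARM_B_MAPPING = {
    "airTempF": (AIR_TEMPERATURE, lambda v: (v - 32) * 5 / 9),
    "moisture_ratio": (SOIL_MOISTURE, lambda v: v * 100),
}


def to_shared(record: dict, mapping: dict) -> list:
    """Translate a local record into observations with explicit shared meaning.
    Keys without a mapping are dropped rather than guessed."""
    out = []
    for key, raw in record.items():
        if key in mapping:
            iri, convert = mapping[key]
            out.append(Observation(iri, round(convert(raw), 2)))
    return out


def by_property(observations: list) -> list:
    """Order observations by property IRI so payloads can be compared."""
    return sorted(observations, key=lambda o: o.property_iri)


# Two syntactically different payloads...
farm_a = {"temp_c": 20.0, "soil_pct": 35.0}
farm_b = {"airTempF": 68.0, "moisture_ratio": 0.35, "vendor_flag": 1}

# ...become directly comparable once expressed in the shared vocabulary.
obs_a = to_shared(farm_a, FARM_A_MAPPING)
obs_b = to_shared(farm_b, FARM_B_MAPPING)
print(by_property(obs_a) == by_property(obs_b))  # True
```

Once both payloads use the same IRIs and canonical units, a consumer no longer needs to know either producer's local schema. The unmapped `vendor_flag` field carries no declared meaning, so it is dropped rather than interpreted.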

AURORAL Decentralized Semantic Interoperability

Vertical Semantic Interoperability

Artificial intelligence (AI) in general, and generative AI based on large language models (LLMs) in particular, are changing many aspects of our daily lives. The relation between generative AI and semantic interoperability therefore needs further investigation. In what respects can generative AI help achieve semantic interoperability? Conversely, how can semantic interoperability support generative AI? Or does generative AI even render semantic interoperability obsolete?

As more aspects of life become digitised, systems grow more complex and need to interact more. To manage this complexity, the concept of digital twins can be applied: real-world assets, systems, and concepts are complemented by digital counterparts. Digital twins add capabilities such as prediction, simulation, and automation. Because they correspond directly to what we know from the physical world, they also make the digital world easier to manage. Semantic interoperability is key for digital twins.

OMG Standards Work Relevant to Digital Twin Semantics

The Object Management Group (OMG) is a member-driven consortium created in 1989 to promote model-driven architectures and languages. Some of the best-known business and systems modeling languages (UML, BPMN, SysML) were created by OMG members and have since become ISO standards. OMG is also the parent organization of the Digital Twin Consortium, founded in 2020.

Recent OMG work relevant to semantics for digital twin interoperability includes the following:

- Version 2 of the Systems Modeling Language, which is based on a formal semantic core.
- Ontology mechanisms such as the Commons Ontology Library and the Multiple Vocabulary Facility.
- An upcoming specification for data exchange in the manufacturing domain.

The presentation describes these efforts and how OMG develops its standards.

A Standard Ontology Library of Patterns for Cross-Domain Reuse

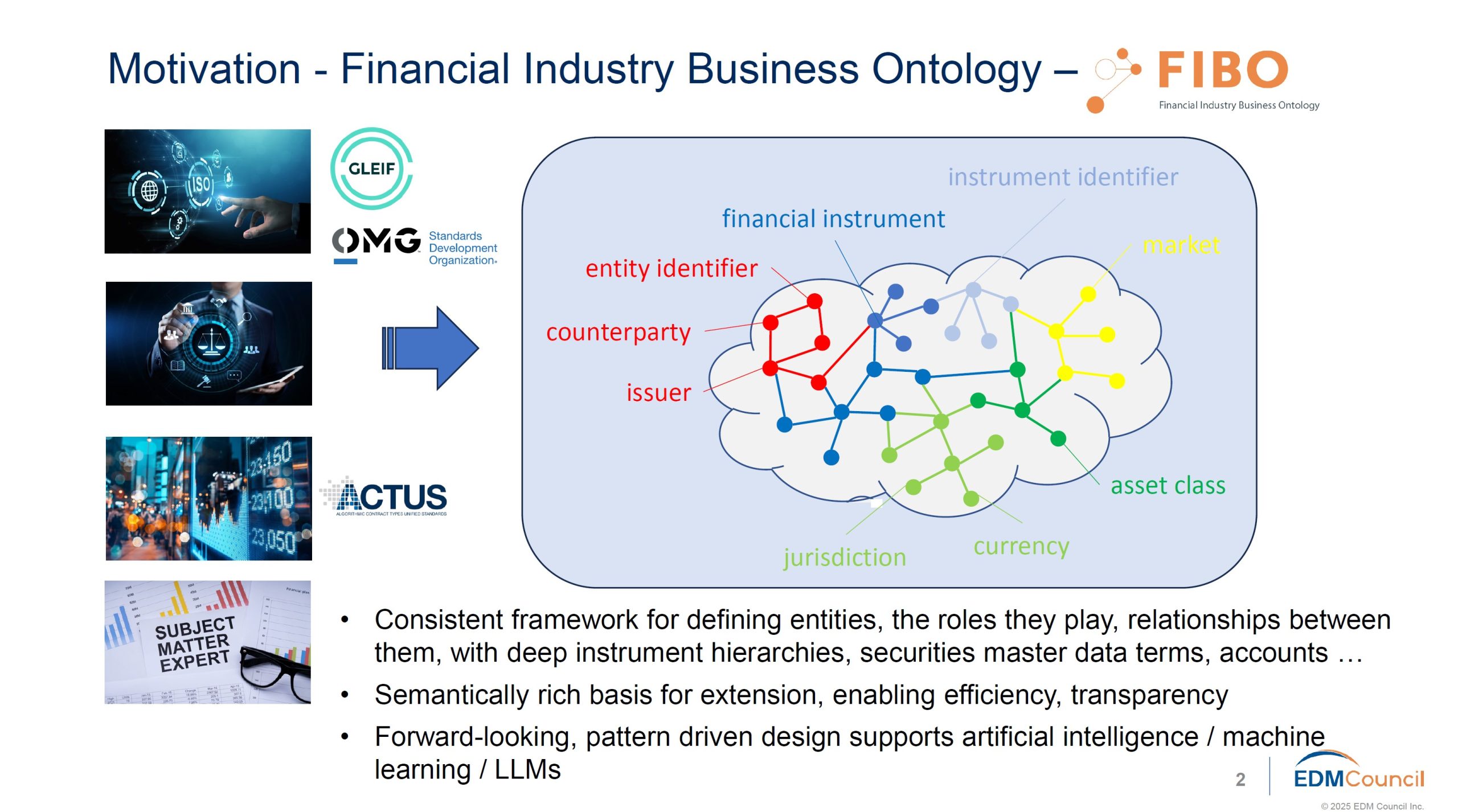

Virtually every organization worldwide is either using or learning to use machine learning (ML) and large language models (LLMs) to improve productivity and address scalability challenges. Robotic process automation, ML, and LLMs are dramatically changing the way people work.

Developing digital twins that leverage these and other AI-based technologies is rapidly becoming a critical precursor to building anything ‘in the real world’. Yet AI models and tools often lack transparency, governance, and interoperability with one another. It can be difficult to understand what information was used to formulate an answer. This includes the timeliness, reliability, IP encumbrances, and other characteristics of that data.

The most effective way to provide the metadata and semantics needed to address these challenges and enable interoperability is through well-designed ontologies and knowledge graphs. These serve as key components of the underlying data fabric. Ontologies provide structure within and across data sets, enable federated query support, and facilitate analyses of data quality, provenance, and more. Yet ontologies can be expensive to build and require collaboration across teams, both within and between organizations.
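The sketch below illustrates how a shared ontology supports federated queries and provenance. Real deployments would typically express the data in RDF and use SPARQL 1.1 Federated Query (the `SERVICE` keyword). This Python version uses plain tuples as triples so that it stays self-contained. The namespace, the two data sets, and the `match` and `sensors_observing` helpers are hypothetical. Both sources use the same ontology terms, so one query can join a sensor reported by one source with facts from any source. Each result also records which source reported the sensor, a minimal form of provenance.

```python
"""Sketch of a query spanning two independently maintained data sets that
share an ontology. Triples are plain tuples; all IRIs are hypothetical."""

from typing import Iterator, Optional, Tuple

EX = "https://example.org/onto#"  # hypothetical shared ontology namespace
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

Triple = Tuple[str, str, str]

# Two sources describe their own devices using the shared terms.
dataset_coop = {
    ("urn:coop:s1", RDF_TYPE, EX + "Sensor"),
    ("urn:coop:s1", EX + "observes", EX + "AirTemperature"),
    ("urn:coop:s1", EX + "locatedIn", "urn:place:valley"),
}
dataset_city = {
    ("urn:city:t7", RDF_TYPE, EX + "Sensor"),
    ("urn:city:t7", EX + "observes", EX + "AirTemperature"),
    ("urn:city:t7", EX + "locatedIn", "urn:place:town"),
    ("urn:city:h2", RDF_TYPE, EX + "Sensor"),
    ("urn:city:h2", EX + "observes", EX + "Humidity"),
}
SOURCES = {"coop": dataset_coop, "city": dataset_city}


def match(s: Optional[str], p: Optional[str],
          o: Optional[str]) -> Iterator[Tuple[str, Triple]]:
    """Yield (source, triple) for every triple in every source that matches
    the pattern; None acts as a wildcard."""
    for name, triples in SOURCES.items():
        for t in triples:
            if ((s is None or t[0] == s) and (p is None or t[1] == p)
                    and (o is None or t[2] == o)):
                yield name, t


def sensors_observing(prop: str) -> list:
    """Return sorted (sensor, location, source) rows for sensors observing
    `prop`. The source is the one that reported the `observes` triple;
    locations are looked up across all sources."""
    results = []
    for source, (sensor, _, _) in match(None, EX + "observes", prop):
        for _, (_, _, place) in match(sensor, EX + "locatedIn", None):
            results.append((sensor, place, source))
    return sorted(results)


for row in sensors_observing(EX + "AirTemperature"):
    print(row)
```

Neither data set has to adopt the other's internal schema. Agreeing on the ontology terms (`observes`, `locatedIn`) is enough for the join to work across both.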


More in-depth slides are available for the following presentations:

- OMG standards relevant to digital twins

- OMG Commons Ontology Library and related standards

- On Digital Twin Semantics

- Virtualization of IoT devices and the development of a Digital Twin software stack in the NEPHELE project

- Semantic interoperability approach of the AURORAL H2020 project